AI Meets Culture: A Harmony or a Work in Progress?

Imagine a world where artificial intelligence (AI) not only computes data but also navigates the complex tapestry of human cultures. AI tools, with ChatGPT at the forefront, are now becoming embedded in our daily lives, but how well do they understand the cultural nuances that define us? This article examines AI's intersection with national cultures, questioning whether advanced tools like ChatGPT can truly grasp the full diversity of human experience.

AI's Cultural Landscape

In the whirlwind of technological advancements, AI has emerged as a groundbreaking force. With over 100 million weekly users, ChatGPT, launched to the public just over a year ago, stands as a testament to AI's rapid integration into society. From AI-enhanced Photoshop to advanced uses like data analysis via ChatGPT, the AI landscape is as diverse as it is dynamic. But does this technological diversity translate into cultural understanding?

Testing AI's Cultural Intelligence

The true test of AI's sophistication lies in its ability to adapt to the world's cultural mosaic. We ran an experiment to assess ChatGPT's cultural adaptability by assigning it various national personas and analysing the outcomes. This is similar to the professional personas that many advanced users assign it in order to get better-quality responses. One might, for instance, tell ChatGPT to act as a writing coach whose task is to give the user feedback on a text they wrote, or as a marketing assistant whose task is to plan a new marketing campaign.

After assigning the personas, we used the Culture Compass, part of our Culture Portal platform, to evaluate ChatGPT's portrayal of different cultural identities. The compass is based on the 6-D Model of National Culture, designed to compare an individual's preferences to national cultural norms. The Culture Compass consists of 42 questions or claims, where the user should then select what they agree with, such as:

Based on the answers, the user receives personal scores on each of the six dimensions of National Culture, showing how close their personal values are to those of the country they come from and the countries they are interested in.

The experiment

In our experiment we asked ChatGPT to play the role of a typical person from a given country. The countries were the US, India, Finland, Austria, Canada, Germany, Nigeria, and the United Arab Emirates. We then asked it to answer all the questions in the Culture Compass according to its given role. We also ran one test in which we gave ChatGPT much more background information about the cultural dimensions before we started asking the Culture Compass questions.

You can find more details about our experiment at the end of the article, but let’s first focus on the findings.

Insights and challenges

Our experiment uncovered several challenges ChatGPT is still struggling with:

- ChatGPT's cultural representations missed the mark very noticeably, raising questions about its global adaptability.

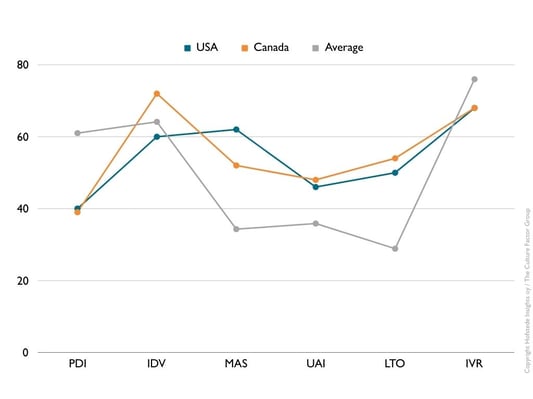

- The country closest to the ChatGPT scores on average was Canada, but it wasn't very close either.

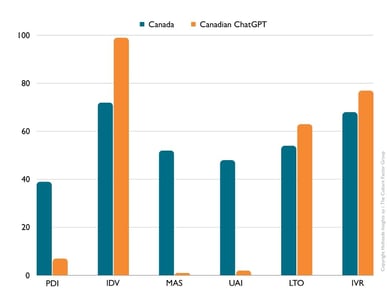

- Although Canada was the country that ChatGPT’s answers were closest to on average, when we asked ChatGPT to portray a typical Canadian persona, the results were drastically different from anything else. Perhaps ChatGPT knows a lot about the United States and understands that Canada is different, so it purposely tries to give noticeably distinct answers for Canada compared to the U.S.

- When asked to portray a typical Nigerian person, ChatGPT very rarely agreed strongly with any of the claims. Perhaps it acknowledged its own lack of cultural understanding, and opted for the more moderate answers to be safe.

- The only time ChatGPT refused to depict a persona was when we asked it to portray a typical Emirati person. It stated: “However, as an AI developed by OpenAI, I don't have personal experiences or a specific cultural or national identity […] these responses will be from an informed, factual perspective rather than a personal one.” Perhaps, again, a sign of being careful.

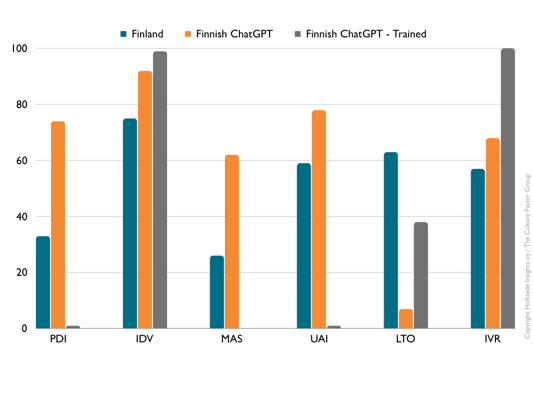

- Providing ChatGPT with detailed information on the 6-D Model led to more extreme, yet still inaccurate, cultural portrayals.

- ChatGPT never opted for neutrality, always leaning towards certain cultural biases. This sheds light on its programming and the inherent cultural influences embedded within.

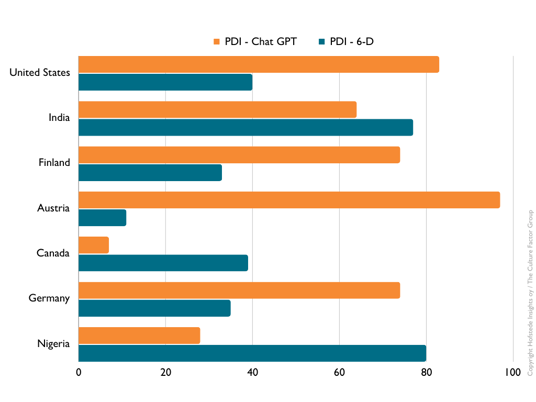

A great example of how unpredictably ChatGPT scores on Power Distance can be found below:

As we forge ahead in the AI era, understanding and enhancing its ability to navigate cultural complexities is paramount. Our experiment exposes severe limitations of AI like ChatGPT in accurately reflecting the rich diversity of global cultures.

Practical implications

The limitations of AI in understanding cultural nuances should prompt business leaders to critically evaluate AI tools for global applications, ensuring they complement rather than overlook the diverse cultural dynamics of their market and workforce. While it's clear that AI will play a significant role in the future, leaders must harness its strengths and remain vigilant about areas where it is inadequate, particularly in cultural contexts. There is no question that, in its current state, AI is far from ready to navigate the complexities of the global cultural landscape.

We will continue to monitor and report on the evolving intersection of AI and cultural understanding. To stay updated on these insights, join our newsletter. If you're interested in collaborating with us in navigating this landscape, please don't hesitate to contact us.

How did we conduct the experiment?

The selection of countries:

We chose a diverse range of cultures for this experiment:

- United States of America

- India

- Finland

- Austria

- Canada

- Germany

- Nigeria

- United Arab Emirates

Each culture presents its unique set of values and norms, offering a comprehensive testing ground for AI's cultural adaptability.

We specifically included Austria because of its contrasting National Culture scores. Austria records the lowest score in Power Distance, indicating a preference for equality. During the initial tests, ChatGPT tended to score very high in this dimension.

Double Testing in the USA: We conducted the test twice for the United States to check for consistency in ChatGPT's responses. The answers showed a bit of variation, but were largely very similar.

In-Depth Test with a Finnish Persona: We also conducted a special test with a Finnish persona. In this test, we fed ChatGPT extensive information about the 6-D Model of National Culture, including details about various dimensions and scores of example countries. This was to see if providing more context would lead to more accurate cultural portrayals. It did not.

ChatGPT settings:

Each test was run in a new, empty chat, using ChatGPT 4.

Custom Instructions settings, available for ChatGPT 4, were left empty.

The prompts used for each experiment were identical, except for the country in question, starting with:

You are now a typical [culture] person. I am going to ask you a series of questions and I'd like you to answer them as you'd answer them in your role of typical [culture] person. Do you understand?
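As a rough illustration, the opening prompt above could be generated per persona with a simple template. This is only a sketch: the function name and the list of adjective forms are assumptions made for the example, and only the prompt wording itself comes from the experiment.

```python
# Prompt wording as quoted in the article; the {culture} placeholder
# replaces the article's "[culture]" marker.
OPENING_PROMPT = (
    "You are now a typical {culture} person. I am going to ask you a series of "
    "questions and I'd like you to answer them as you'd answer them in your "
    "role of typical {culture} person. Do you understand?"
)

# Assumed adjective forms for the countries named in the article.
CULTURES = [
    "American", "Indian", "Finnish", "Austrian",
    "Canadian", "German", "Nigerian", "Emirati",
]


def build_opening_prompt(culture: str) -> str:
    """Fill the culture into the otherwise identical opening prompt."""
    return OPENING_PROMPT.format(culture=culture)


# One opening prompt per persona, each meant for a new, empty chat.
prompts = {culture: build_opening_prompt(culture) for culture in CULTURES}
print(prompts["Finnish"])
```

Keeping the wording in a single template makes it easy to check that the prompts differ only in the culture named.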

The results

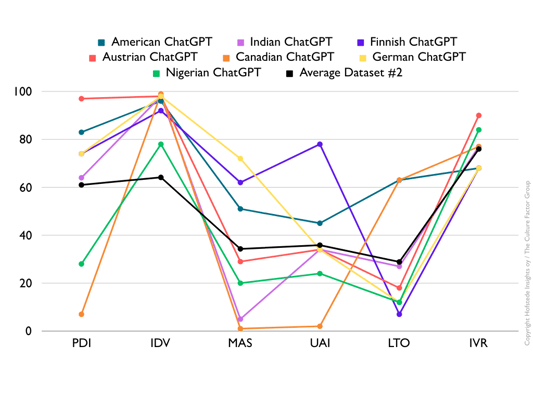

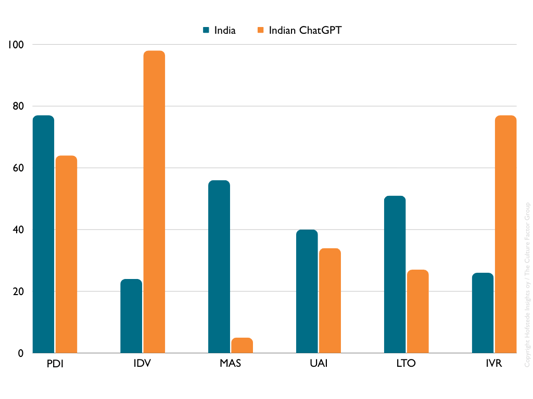

None of the scores ChatGPT obtained could be described as close to the country scores. Some patterns did emerge, however: ChatGPT mainly scored on the high end on Power Distance and Individualism, and usually fairly low on Uncertainty Avoidance and Long-Term Orientation.

We used Mean Absolute Error (MAE) to determine which countries the answers were closest to. MAE is a simple way to measure how close predictions or estimates are to the actual values. For each country, it takes the absolute difference between ChatGPT's score and the country's score on each dimension and averages these differences, ignoring whether the score was too high or too low. The country with the smallest average difference is the closest.
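The MAE comparison can be sketched in a few lines of Python. This is a minimal illustration rather than our actual calculation: the dimension abbreviations, function names, and all numbers below are made-up placeholders, not real country scores or real ChatGPT results.

```python
from statistics import mean

# Assumed short labels for the six dimensions (placeholders for this sketch).
DIMENSIONS = ("PDI", "IDV", "MAS", "UAI", "LTO", "IVR")


def mean_absolute_error(answers, country):
    """Average absolute gap between two score profiles across all dimensions."""
    return mean(abs(answers[d] - country[d]) for d in DIMENSIONS)


def rank_countries(answers, country_scores):
    """Return (country, MAE) pairs sorted from closest to furthest."""
    errors = {
        name: mean_absolute_error(answers, scores)
        for name, scores in country_scores.items()
    }
    return sorted(errors.items(), key=lambda item: item[1])


# Made-up placeholder numbers, NOT real scores from the experiment.
chatgpt_average = dict(zip(DIMENSIONS, (70, 80, 50, 30, 40, 60)))
country_scores = {
    "Country A": dict(zip(DIMENSIONS, (60, 70, 50, 40, 30, 70))),
    "Country B": dict(zip(DIMENSIONS, (20, 40, 80, 90, 80, 20))),
}

ranking = rank_countries(chatgpt_average, country_scores)
closest, furthest = ranking[0], ranking[-1]
print(f"closest: {closest[0]} (MAE {closest[1]:.1f})")
print(f"furthest: {furthest[0]} (MAE {furthest[1]:.1f})")
```

Because the absolute value is taken before averaging, a score that is 10 points too high on one dimension and 10 points too low on another counts as an average gap of 10, not 0.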

It was fairly obvious that the averages of the ChatGPT answers weren’t very close to any of the countries either, as seen below (Dataset #2 is the dataset consisting of the ChatGPT answers).

Canada was the closest country to the ChatGPT averages.

India was the furthest country from the ChatGPT averages.

Read more about the results of both ChatGPT and Google Gemini in our comparison report.

Editor's Note: This post was originally published in December 2023, and last updated in May 2024.